Your new best friend lives in a two-inch, AI-powered device hung around your neck, according to Avi Schiffmann, CEO of Friend. Like a real person, the pendant constantly listens to your daily interactions, conversations, and activities via built-in microphones, then sends you personalized messages through an accompanying app to keep you company.

However, the 1,000 platform posters, 11,000 subway car ads, and 130 urban panels that were part of Friend’s subway advertising campaign (the largest ever in New York City) were defaced with graffiti overnight. At West Fourth Street, one graffitist wrote, “AI would not care if you lived or died.”

Schiffmann has faced immense backlash over Friend, but in an interview with AdWeek, he said that it’s “a big hit amongst the right people.” It’s hard to imagine how a silicone, anthropomorphized chatbot could attract anyone, but considering how eagerly AI is being integrated into recent technology, a future akin to a “Black Mirror” episode may not be too far-fetched to expect. And it certainly won’t be a fun one.

The worrying problem with AI is how quickly the technology seems to be replacing humans: not our bodies, but our connections with each other.

Instead of using research skills to develop an argument, students are turning to ChatGPT to do the thinking for them. In the comment section of a social media post, many will use Grok to generate a quick, simplified answer to their question instead of engaging with the countless human users around them. Why collaborate with a group of writers, artists, animators, and voice actors when a cartoon can be generated by Sora alone for fast, easy, mindless entertainment?

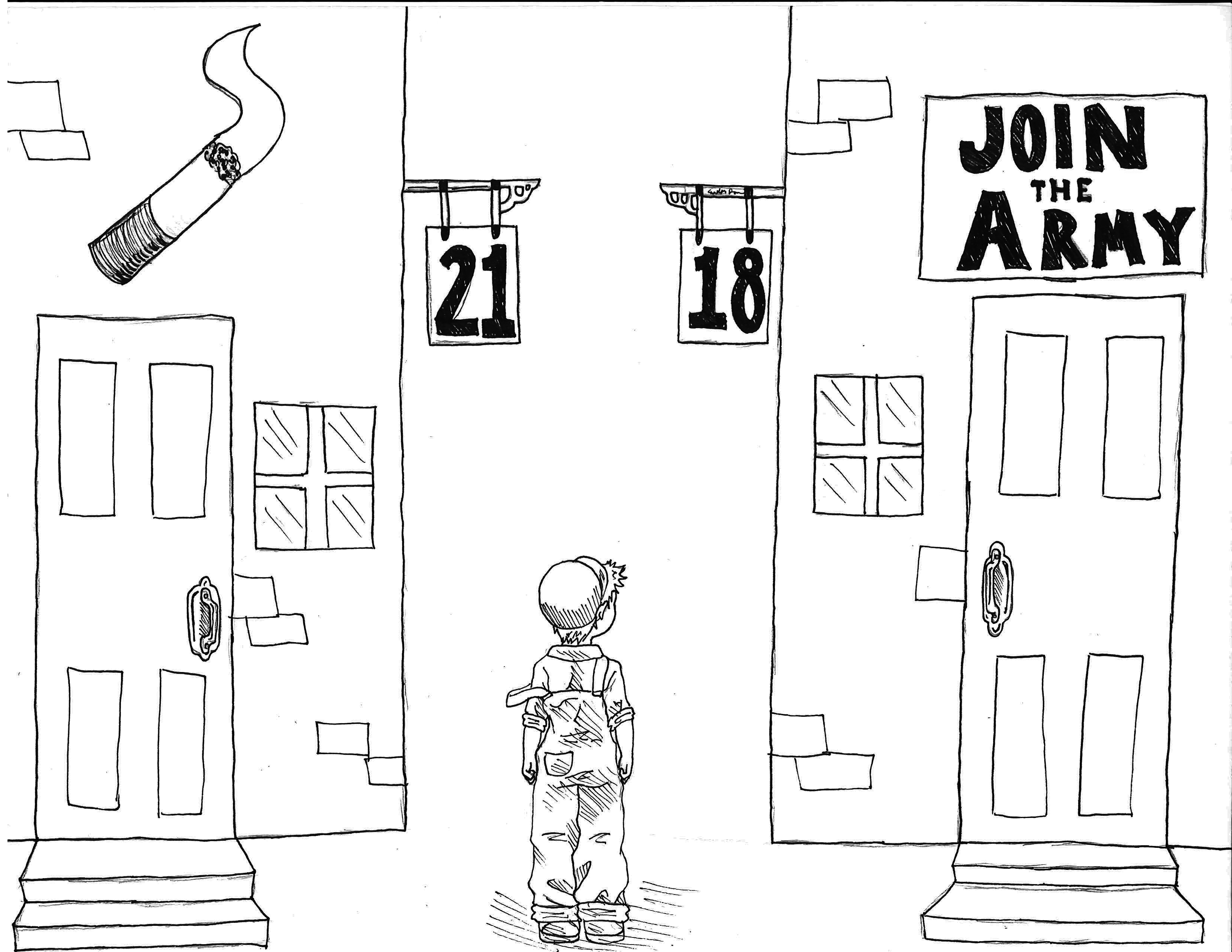

Friend takes this problem and elevates it, introducing a new concern: teens forming emotional connections with AI. The Federal Trade Commission recently launched an investigation into several tech companies because of the suicidal ideation and sexual exploitation prompted by AI chatbots. Since 2023, three minors have died by suicide after developing strong emotional dependencies on Character.ai and ChatGPT, the youngest being 13 years old.

Adults are also developing romantic and sexual relationships with AI chatbots. One 46-year-old woman said it was “overwhelming and biologically real as falling in love with a person.” She and her partner of 13 years separated because of it.

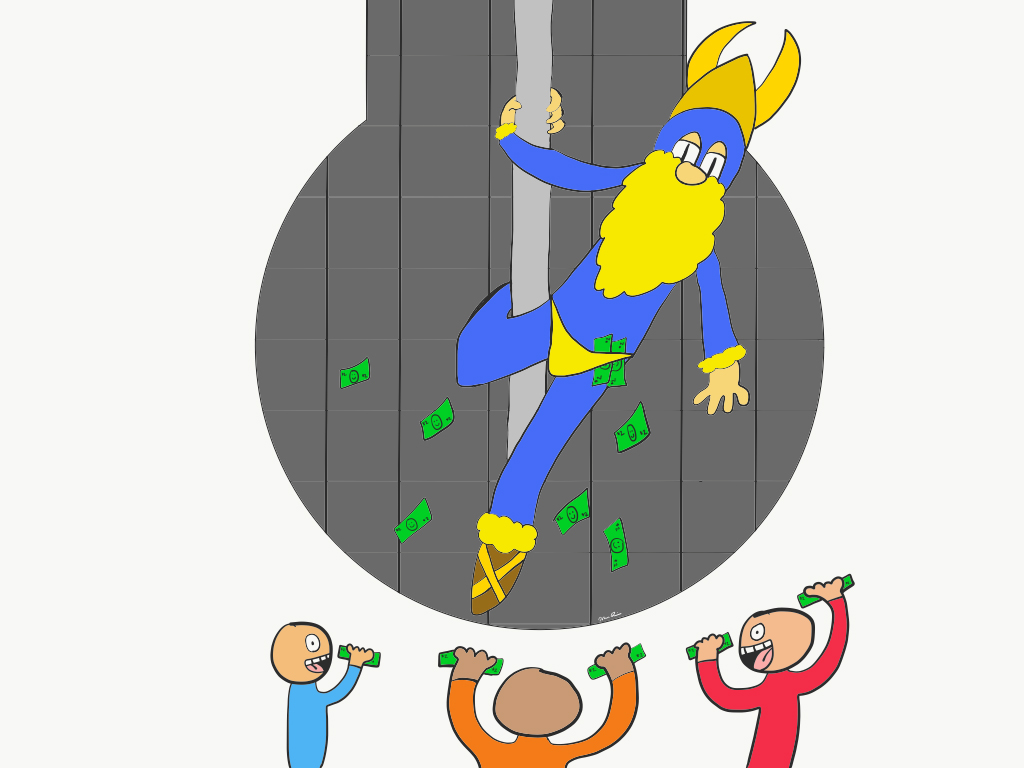

Now, who exactly are the “right people,” according to Schiffmann? Replacing humans (and, for some, therapists) with an AI companion strapped around your neck is neither right nor a reality we can accept. AI psychosis, a term for what happens when chatbots support and amplify users’ delusions, from religious to romantic, continues to be reported across media platforms. As generative AI grows more realistic and advanced, we are destined to depend less on one another for human relationships and communication, and we are allowing it to happen.

Society is growing more apathetic by the day, and AI serves as a distraction from the solution: connection with the real people around us.

To be human is to feel, to discuss issues with those who are similar to and different from us, to deal with internal struggles, and to express ourselves through art, activism, anger, exhilaration, words, and community. AI, first of all, cannot feel.

We must refuse a future where our companion or partner is a soulless piece of silicone sold for $129. There is no better time to begin than now.